Have you ever seen someone and, although you might not know them, felt a deep sense of trust? Or what about looking at a picture with the features of a face and immediately identifying it as a person you know? These might seem like random moments, but there is a deep neurological process that performs the task of facial recognition. In fact, the process is so detailed that a large part of our recognition ability depends on the eyes.

In 1992, neuroscientist Charles Gross conducted experiments on monkeys to understand how neurons in the temporal lobe respond to complex stimuli. He discovered that certain neurons in the brain were not triggered by simple shapes and objects. However, when the monkeys were shown faces, these specific neurons were activated. This led to further study and to an understanding that certain parts of the brain are integral to facial recognition.

Researchers found not only that the brain is specialized for identifying faces, but also that identification and labeling follow a structured process. When the brain categorizes a face, it first does so through a process called population coding, in which neurons fire as the features that make up a face are registered. Sparse coding is the second tier of identification, in which a smaller set of neurons is activated by specific facial features such as the nose, eyes, and mouth. These activations can overlap between faces, but never to exactly the same degree. Specificity coding is the most detailed level of neuron firing and the third step in facial recognition: specific neurons fire in response to details of, or differences between, features. For example, some neurons might fire for angular eyes but not for round eyes. The combination of all of these processes is how we identify faces. The brain can not only recognize that what it is looking at is a face, but also perceive characteristics such as whether the person is male or female, and at a deeper level it can attach specific knowledge, like a name, to a face. A vast, structurally connected neural network fires simultaneously when identifying a face. This coordinated activity across many areas of the brain is called distributed representation.

There are about seven areas in the brain that contribute to facial recognition: the Occipital Face Area (OFA), Fusiform Face Area (FFA), Superior Temporal Sulcus (STS), Anterior Temporal Lobe (ATL), Inferior Frontal Gyrus (IFG), Amygdala (AMG), and Orbitofrontal Cortex (OFC). The ability to distinguish facial features is a function of the FFA. The STS is sensitive to face orientation, which will be relevant to the eye gaze study I discuss below. The ATL helps put a name to a face and capture knowledge about that person. The AMG drives emotional reactions to faces, and the OFC is associated with reward-related judgments such as trustworthiness and attractiveness.

In the article and study “Eye Gaze and Person Perception” by Neil Macrae, there is an interesting finding: how easily a face is identified depends on both its orientation and its eye gaze. The more frontal the view and the more direct the eye contact, the faster the recognition. This effect was attributed to strong activation in the Superior Temporal Sulcus, the area identified as the hotspot for recognizing face position and eye gaze. The study involved 18 women and 14 men, who viewed a set of 48 pictures: 12 frontal views, 12 3/4 views, 12 lateral views, and 12 frontal views with the eyes closed. Subjects viewed the images in random order, and their task was to identify each face as male or female. The results showed that the more directly a face was oriented forward, the faster it was identified. Identification slowed as the face turned toward a lateral view, and the eyes-closed faces produced the slowest times despite facing forward. This suggests that mutual eye contact stimulates strong emotional responses in the amygdala that support identification.

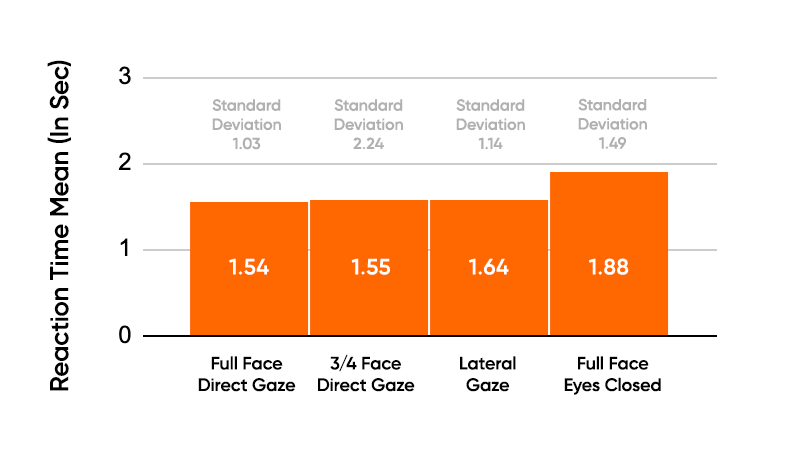

I recreated this experiment in a classroom setting to see whether the results would match those of Macrae’s study. The participants were a mix of men and women, roughly 20 to 30 years old. There were 39 participants in total, and their task was to view a selection of images and identify whether each face was male or female. The images showed four face positions: full face with direct gaze, full face with eyes closed, 3/4 face with direct gaze, and lateral face. The experiment measured the speed of identification for each face position. It was run on a computer screen, with images of the different face positions presented in random order. The independent variable was face orientation, and the dependent variable was the speed of identification for each orientation.

After the experiment was completed, the class results appeared consistent with those in Macrae’s article. The fastest reaction time was recorded for the full face with direct gaze, while the slowest reaction times were for the full face with eyes closed. Interestingly, whether the eyes were open or closed had a stronger impact than face position. Among the open-eyed images, ordering the conditions from fastest to slowest reaction time shows that the further the face is from frontal, the harder it is to identify. The slowest reaction can be attributed to the lack of gaze: a face is harder to identify when there is no eye contact.

Another interesting note related to the study is the idea that the STS and ATL regions not only identify whether a face is male or female but can also assist in associating words with a face. This ability is most likely part of the person-construal process, which helps us quickly identify and make decisions about someone. This is exactly the hypothesis the researchers set out to test: that our brains can identify, categorize, and make decisions about others almost instantaneously.

Consider how eye contact in different contexts would affect cognitive processing. What effects would it have in a school setting, in the workplace, or out on the street? From personal experience, I have always felt that strong eye contact indicates confidence and interest in a conversation. However, that is not always the case. I think back to the moments when I was caught in a lie and how I would avoid eye contact with my parents. It was as if I were subconsciously trying to make it harder for them to detect my lie. But not everyone experiences these things the same way. In Japanese culture, people often avoid direct eye contact as a sign of respect, while in some Arab countries eye contact is a way of judging whether a person is trustworthy. If we conducted this experiment with people who culturally avoid eye contact, would the results be the same? It’s unclear, but I believe the results would stay fairly consistent with those in Macrae’s study, because the brain is specifically tailored to function this way. Regions such as the STS, ATL, AMG, and others have built-in capacities to identify these characteristics in faces. Reaction times might be slower, but overall the order from fastest to slowest identification should remain the same. What is your experience with eye gaze and facial recognition? Do you find these results hold true, or does your experience reflect something different?